Pssst… have you heard about Google Search Console’s BigQuery integration??

The thing I enjoy most about SEO is thinking at scale. Working at Postmates was fun because sometimes it’s more appropriate to size opportunities on a logarithmic scale than a linear one.

But there is a challenge that comes along with that: opportunities scale logarithmically, but I don’t really scale… at all. That’s where scripting comes in.

SQL, Bash, JavaScript, and Python regularly come in handy to identify opportunities and solve problems. This example demonstrates how scripting can be used in digital marketing to solve the challenge of having more potentially useful data than you can work through by hand.

Want help? I offer technical SEO and analytics consulting services to augment digital marketing SEO programs.

Scaling SEO with the Google Search Console API

Most, if not all, big ecommerce and marketplace sites are backed by databases. And the bigger these places are, the more likely they are to have multiple stakeholders managing and altering data in the database. From website users to customer support to engineers, there are several ways that database records can change. As a result, the site’s content grows, changes, and sometimes disappears.

It’s very important to know when these changes occur and what effect the changes will have on search engine crawling, indexing, and results. Log files can come in handy, but the Google Search Console is a pretty reliable source of truth for what Google sees and acknowledges on your site.

Getting Started

This guide will help you start working with the Google Search Console API, specifically with the Crawl Errors report, but the script could easily be modified to query Google Search performance data or interact with sitemaps in GSC.

Want to learn about how APIs work? See: What is an API?

To get started, clone the GitHub repository: https://github.com/trevorfox/google-search-console-api and follow the “Getting Started” steps on the README page. If you are unfamiliar with GitHub, don’t worry. This is an easy project to get you started.

Make sure you have the following:

- Python 3 and pip (or be ready to make some modifications for Python 2.x)

- A clone of the repository set up according to the README

- A Google API Console Project with the Google account tied to your Google Search Console

- API Credentials

Now for the fun stuff!

Connecting to the API

This script uses a slightly different method to connect to the API: instead of putting the Client ID and Client Secret directly in the code, the Google API auth flow reads them from the client_secret.json file. This way you don’t have to modify the webmaster.py file at all, as long as the client_secret.json file is in the /config folder.

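import pickle

from google_auth_oauthlib.flow import InstalledAppFlow
from googleapiclient.discovery import build

# Reuse cached credentials if a previous run saved them.
try:
    credentials = pickle.load(open("config/credentials.pickle", "rb"))
except (OSError, IOError):
    # First run: go through the authorization flow, then cache the result.
    flow = InstalledAppFlow.from_client_secrets_file('client_secret.json', scopes=OAUTH_SCOPE)
    credentials = flow.run_console()
    pickle.dump(credentials, open("config/credentials.pickle", "wb"))

webmasters_service = build('webmasters', 'v3', credentials=credentials)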

For convenience, the script saves the credentials to the project folder as a pickle file. Storing the credentials this way means you only have to go through the Web authorization flow the first time you run the script. After that, the script will use the stored and “pickled” credentials.
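The load-or-create pattern behind that pickle file can be pulled out into a small helper. This is only a sketch, not code from the repository: `load_or_create` and its `create` argument are hypothetical names, and it uses nothing beyond the standard library so you can try it without touching the Google auth flow.

```python
import os
import pickle


def load_or_create(path, create):
    """Return the object pickled at `path`, or build it with `create()` and cache it."""
    try:
        with open(path, "rb") as fh:
            return pickle.load(fh)
    except (OSError, EOFError, pickle.UnpicklingError):
        # No usable cache file yet: take the slow path once, then save the result.
        value = create()
        os.makedirs(os.path.dirname(path) or ".", exist_ok=True)
        with open(path, "wb") as fh:
            pickle.dump(value, fh)
        return value


# Hypothetical wiring into the script above:
# credentials = load_or_create("config/credentials.pickle", flow.run_console)
```

The second time it runs, `create` is never called, which is exactly why you only see the authorization prompt once.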

Querying Google Search Console with Python

The auth flow builds the “webmasters_service” object, which allows you to make authenticated API calls to the Google Search Console API. This is where Google documentation kinda sucks… I’m glad you came here.

The script’s webmasters_service object has several methods, one for each of the five resources you can query in the API. Each resource in turn exposes verb methods, such as list() or markAsFixed(), that indicate how you would like to interact with or query it.

The script currently uses the “webmasters_service.urlcrawlerrorssamples().list()” method to find which crawled URLs had a given type of error.

gsc_data = webmasters_service.urlcrawlerrorssamples().list(siteUrl=SITE_URL, category=ERROR_CATEGORY, platform='web').execute()

It can then optionally call “webmasters_service.urlcrawlerrorssamples().markAsFixed(…)” to note that the URL error has been acknowledged, removing it from the webmaster reports.
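Once gsc_data comes back, a quick tally is often the first thing you want. Here is a minimal sketch, assuming the samples arrive under a “urlCrawlErrorSample” key and that an empty result simply omits that key. The `count_by_response_code` helper and the example payload are made up for illustration.

```python
from collections import Counter


def count_by_response_code(gsc_data):
    """Tally sample URLs by HTTP response code."""
    # Assumption: samples live under "urlCrawlErrorSample"; a missing key
    # is treated as "no samples".
    samples = gsc_data.get("urlCrawlErrorSample", [])
    return Counter(sample.get("responseCode") for sample in samples)


# Hand-written stand-in for an API response, not real data.
example = {
    "urlCrawlErrorSample": [
        {"pageUrl": "some/page-path", "responseCode": 404},
        {"pageUrl": "some/other-path", "responseCode": 404},
        {"pageUrl": "gone/for-good", "responseCode": 410},
    ]
}

for code, total in count_by_response_code(example).most_common():
    print(code, total)
```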

Google Search Console API Methods

There are five ways to interact with the Google Search Console API. Each is listed below as a method of “webmasters_service” because that is the variable name of the object in the script.

webmasters_service.urlcrawlerrorssamples()

This allows you to get details for a single URL and list details for several URLs. You can also programmatically mark URLs as fixed with the markAsFixed method. Note that marking something as fixed only changes the data in Google Search Console. It does not tell Googlebot anything or change crawl behavior.

The resources are represented as follows. As you might imagine, this will help you find the source of broken links and get an understanding of how frequently your site is crawled.

{
  "pageUrl": "some/page-path",
  "urlDetails": {
    "linkedFromUrls": ["https://example.com/some/other-page"],
    "containingSitemaps": ["https://example.com/sitemap.xml"]
  },
  "last_crawled": "2018-03-13T02:19:02.000Z",
  "first_detected": "2018-03-09T11:15:15.000Z",
  "responseCode": 404
}

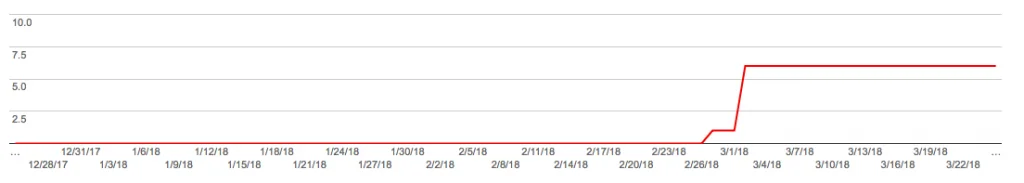
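To actually find the source of broken links, you can flip the sample resources around so each linking page points at the broken paths it references. A rough sketch using only the fields shown in the resource above; `broken_links_by_source` is a hypothetical helper, and the sample data is hand-written.

```python
from collections import defaultdict


def broken_links_by_source(samples):
    """Map each linking page to the list of broken paths it points at."""
    sources = defaultdict(list)
    for sample in samples:
        # urlDetails (and linkedFromUrls inside it) may be absent, so default both.
        details = sample.get("urlDetails", {})
        for source in details.get("linkedFromUrls", []):
            sources[source].append(sample["pageUrl"])
    return dict(sources)


# Hand-written examples shaped like the resource above.
samples = [
    {
        "pageUrl": "some/page-path",
        "urlDetails": {"linkedFromUrls": ["https://example.com/some/other-page"]},
        "responseCode": 404,
    },
    {
        "pageUrl": "another/page-path",
        "urlDetails": {"linkedFromUrls": ["https://example.com/some/other-page"]},
        "responseCode": 404,
    },
    {"pageUrl": "orphan/page-path", "responseCode": 404},
]

for source, broken in broken_links_by_source(samples).items():
    print(source, "->", broken)
```

Pages that show up with long lists are usually the fastest fixes: one template or nav change can clear many errors at once.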

webmasters_service.urlcrawlerrorscounts()

Querying this resource returns day-by-day error counts, which is the data you need to recreate the chart in the URL Errors report.
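To chart those counts yourself, it helps to flatten the response into simple rows first. The sketch below assumes a response shaped as “countPerTypes” entries, each with a “category” and a list of “entries” holding a “timestamp” and a “count” (possibly as a string). That shape is written from memory, so check it against a real response; `daily_counts` and the example payload are hypothetical.

```python
def daily_counts(response):
    """Flatten an error-counts response into sorted (date, category, count) rows."""
    rows = []
    # Assumption: one item per category under "countPerTypes",
    # each holding a time series under "entries".
    for series in response.get("countPerTypes", []):
        for entry in series.get("entries", []):
            day = entry["timestamp"][:10]  # keep just YYYY-MM-DD
            rows.append((day, series["category"], int(entry["count"])))
    return sorted(rows)


# Hand-written stand-in for an API response, not real data.
example = {
    "countPerTypes": [
        {
            "platform": "web",
            "category": "notFound",
            "entries": [
                {"timestamp": "2018-03-13T00:00:00.000Z", "count": "12"},
                {"timestamp": "2018-03-12T00:00:00.000Z", "count": "14"},
            ],
        }
    ]
}

for row in daily_counts(example):
    print(row)
```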

webmasters_service.searchanalytics()

This is probably what you are most excited about. This allows you to query your search console data with several filters and page through the response data to get way more data than you can get with a CSV export from Google Search Console. Come to think of it, I should have used this for the demo…

The response looks like this, with a “row” object for every record, depending on how you queried your data. In this case, only “device” was used to query the data, so there would be three “rows,” each corresponding to one device.

{
  "rows": [
    {
      "keys": ["device"],
      "clicks": double,
      "impressions": double,
      "ctr": double,
      "position": double
    },
    ...
  ],
  "responseAggregationType": "auto"
}
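Paging is what gets you past the CSV export limit. A minimal sketch of the idea: keep bumping startRow in the request body until a page comes back short. `iter_rows` and `run_query` are hypothetical names, and `run_query` stands in for whatever function actually sends the request, so the loop can be tried without credentials. The page size of 1000 is an assumption you can raise.

```python
def iter_rows(run_query, body, page_size=1000):
    """Yield every row by advancing startRow until a short page comes back."""
    start_row = 0
    while True:
        # Copy the caller's body and set the paging fields for this request.
        page_body = dict(body, rowLimit=page_size, startRow=start_row)
        rows = run_query(page_body).get("rows", [])
        yield from rows
        if len(rows) < page_size:
            break  # a short (or empty) page means there is nothing left
        start_row += page_size


# Hypothetical wiring to the real service object from the script:
# run_query = lambda b: webmasters_service.searchanalytics().query(
#     siteUrl=SITE_URL, body=b).execute()

# Offline stand-in that serves five fake rows, two per page.
FAKE_ROWS = [{"keys": [str(i)], "clicks": float(i)} for i in range(5)]


def fake_query(body):
    start = body["startRow"]
    page = FAKE_ROWS[start:start + body["rowLimit"]]
    return {"rows": page} if page else {}


all_rows = list(iter_rows(fake_query, {"dimensions": ["device"]}, page_size=2))
print(len(all_rows))
```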

webmasters_service.sites()

Get, list, add and delete sites from your Google Search Console account. This is perhaps really useful if you are a spammer creating hundreds or thousands of sites that you want to be able to monitor in Google Search Console.

webmasters_service.sitemaps()

Get, list, submit, and delete sitemaps in Google Search Console. If you want to get into fine-grained detail to understand indexing with your sitemaps, this is the way to add all of your segmented sitemaps. The response will look like this:

{
  "path": "https://example.com/sitemap.xml",
  "lastSubmitted": "2018-03-04T12:51:01.049Z",
  "isPending": false,
  "isSitemapsIndex": true,
  "lastDownloaded": "2018-03-20T13:17:28.643Z",
  "warnings": "1",
  "errors": "0",
  "contents": [
    {
      "type": "web",
      "submitted": "62",
      "indexed": "59"
    }
  ]
}
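Notice that the counts come back as strings, so convert them before doing any math. Here is a small sketch that turns one sitemap resource into an indexed-to-submitted ratio, using the values from the response above. `indexed_ratio` is a hypothetical helper name.

```python
def indexed_ratio(sitemap):
    """Return indexed / submitted across a sitemap's contents, or None if nothing was submitted."""
    contents = sitemap.get("contents", [])
    # The API returns these counts as strings, so cast before summing.
    submitted = sum(int(item["submitted"]) for item in contents)
    indexed = sum(int(item["indexed"]) for item in contents)
    return indexed / submitted if submitted else None


# Trimmed copy of the example response shown above.
sitemap = {
    "path": "https://example.com/sitemap.xml",
    "contents": [{"type": "web", "submitted": "62", "indexed": "59"}],
}

print(round(indexed_ratio(sitemap), 3))  # 0.952
```

Run this per segmented sitemap and the weak segments tend to stand out quickly.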

Modifying the Python Script

You might want to change the Search Console query or do something with the response data. The query is in webmasters.py, and you can change the code to iterate through any query. The check method in checker.py is used to “operate” on every response resource. It can do things that are a lot more interesting than printing response codes.
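As one idea for something more interesting, the check step could flatten each resource into a row and write a CSV you can share. This is a sketch only: I am not reproducing the real signature from checker.py, so treat `check`, `to_csv`, and the column names as hypothetical.

```python
import csv
import io

FIELDS = ["pageUrl", "responseCode", "last_crawled", "linked_from"]


def check(sample):
    """Hypothetical check step: flatten one crawl error sample into a CSV-ready row."""
    details = sample.get("urlDetails", {})
    return {
        "pageUrl": sample.get("pageUrl", ""),
        "responseCode": sample.get("responseCode", ""),
        "last_crawled": sample.get("last_crawled", ""),
        # Number of pages known to link to this broken URL.
        "linked_from": len(details.get("linkedFromUrls", [])),
    }


def to_csv(samples):
    """Run check() over every sample and return the rows as CSV text."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for sample in samples:
        writer.writerow(check(sample))
    return buffer.getvalue()


# Hand-written sample shaped like the resource shown earlier.
samples = [
    {
        "pageUrl": "some/page-path",
        "urlDetails": {"linkedFromUrls": ["https://example.com/some/other-page"]},
        "last_crawled": "2018-03-13T02:19:02.000Z",
        "responseCode": 404,
    }
]

print(to_csv(samples))
```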

Query all the Things!

I hope this helps you move forward with your API usage, Python scripting, and Search Engine Optimization… optimization. Any questions? Leave a comment. And don’t forget to tell your friends!

Visualize your Google Search Console data for free with Keyword Clarity. Import your keywords with one click and find patterns with interactive visualizations.
